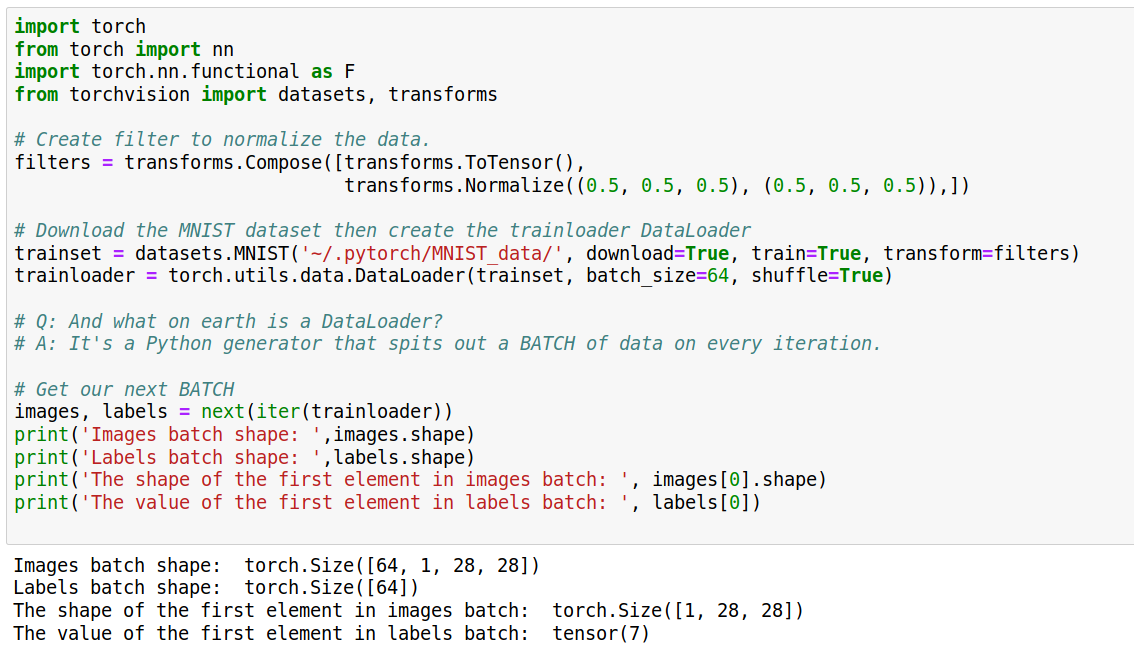

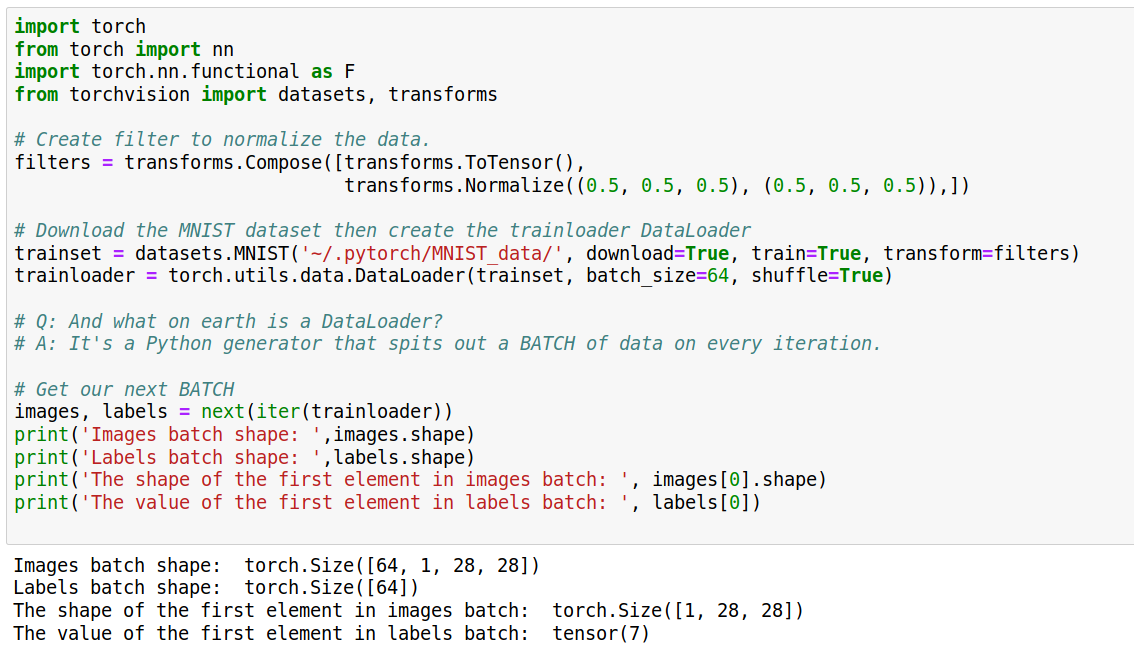

Before grabbing your data it helps to first understand it. The classic MNIST digit data is composed of lot grayscale images measuring 28 X 28 pixes along with the labels. This is important to know because we need to figure out what transforms should be applied as we bring in the data.

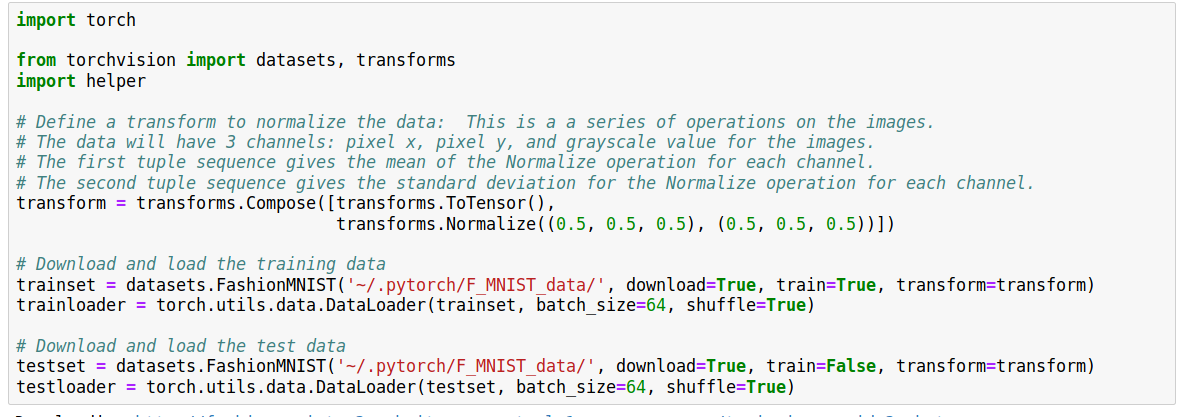

The filters variable below is usually labeled “transform”, which doesn’t do it justice: We take raw data and we filter it to suit our needs. Notice that we grab and transform all in one shot by way of the dataset class. Usually we would also be grabbing a testset as well like:

testset = datasets.MNIST('~/.pytorch/MNIST_data/', download=True, train=False, transform=filters)

followed by:

testloader = torch.utils.data.DataLoader(testset, batch_size=64, shuffle=True)

These datasets are used to create the DataLoader which is a Python generator that returns a batch of the data, in this case a batch of 64 images.

Once we have our to DataLoaders, one for training and the other for testing, we are ready for the rest.

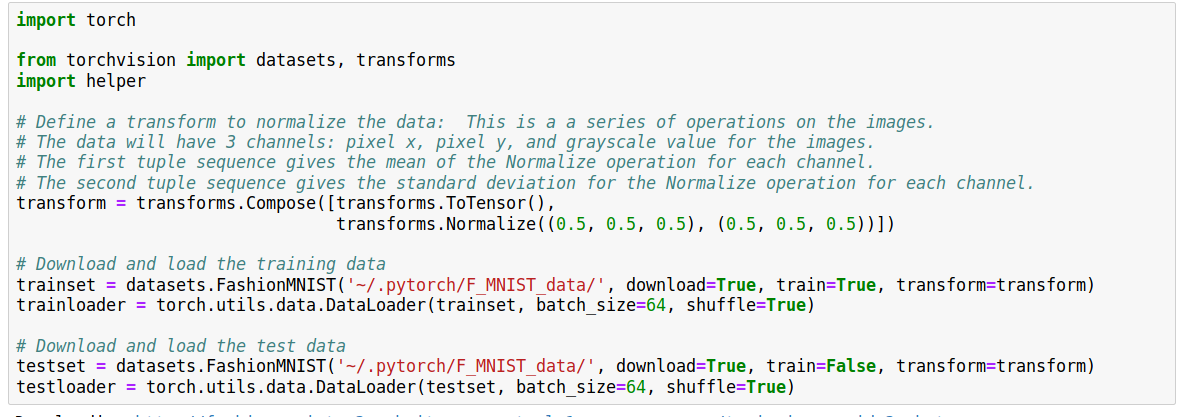

The same process is applied to the MNIST fashion data: